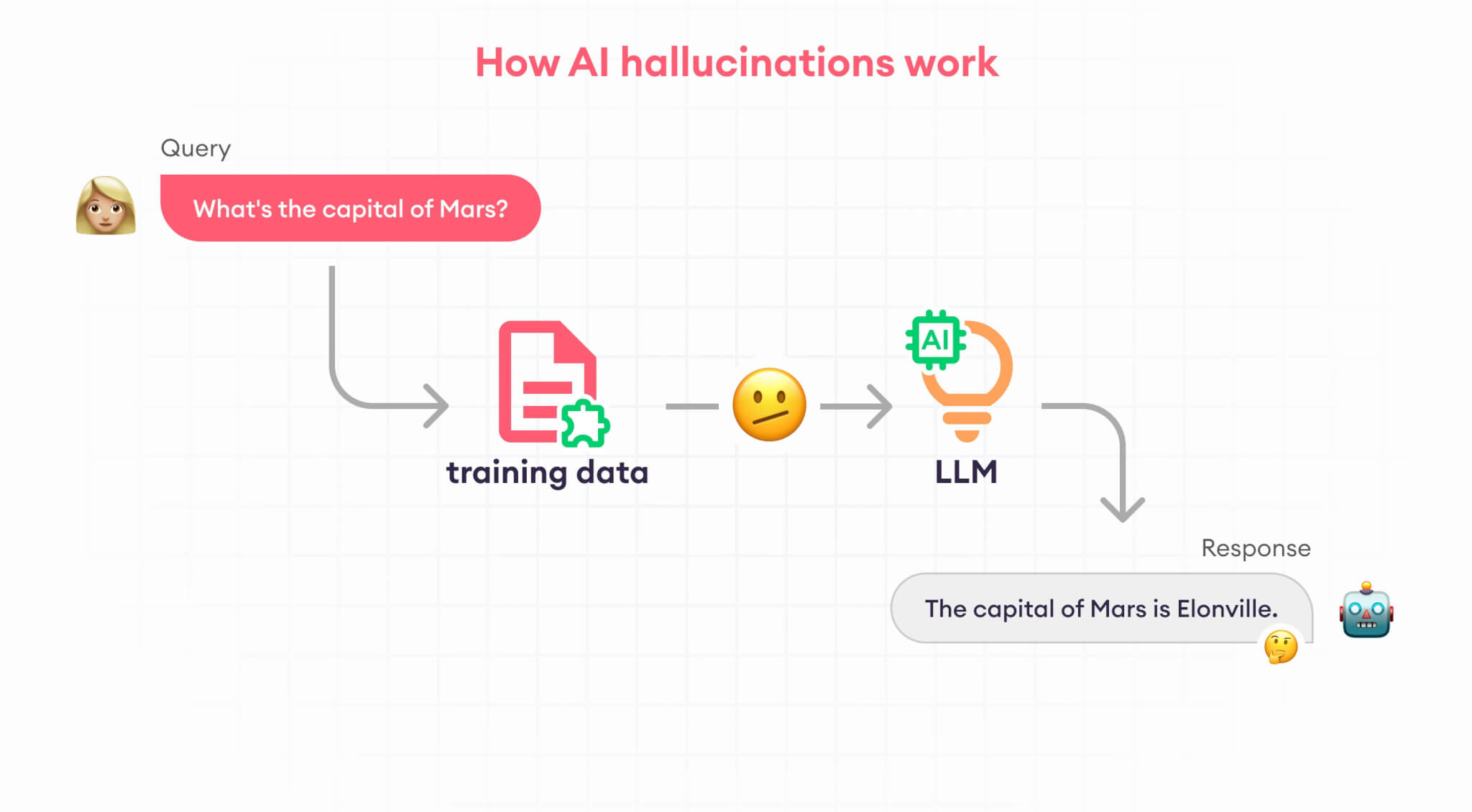

In the realm of artificial intelligence (AI), hallucination refers to instances where AI models generate outputs that are incorrect, nonsensical, or not grounded in the provided input data. Unlike human hallucinations, which are perceptions without external stimuli, AI hallucinations result from data and algorithmic anomalies within the AI system.

Understanding AI hallucination is crucial in today’s AI-driven world, as it affects the reliability and trustworthiness of AI applications. From autonomous vehicles to medical diagnosis systems, ensuring AI accuracy is vital for safety and efficacy. Hallucinations make for funny memes at times, but we cannot ignore the harm they can cause in the real world.

Data-Related Causes

AI systems rely heavily on the data they are trained on. Insufficient or biased training data can lead to AI hallucinations. If the training data does not cover the full range of scenarios the AI might encounter, the model may generate incorrect outputs when faced with unfamiliar situations.

Overfitting, where the model learns the training data too well and fails to generalise, and underfitting, where the model is too simplistic to capture underlying patterns, are both common issues that can cause hallucinations.
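One common way to tell these two failure modes apart is to compare the error on the training set with the error on held-out validation data. The sketch below is only illustrative: the function name `diagnose_fit` and both thresholds are assumptions made for this example, not standard values, and a real project would tune them to the task.

```python
def diagnose_fit(train_error: float, val_error: float,
                 max_error: float = 0.20, max_gap: float = 0.10) -> str:
    """Classify a model's fit from its training and validation error rates.

    Both thresholds are illustrative assumptions, not standard values.
    """
    if train_error > max_error:
        # The model cannot even fit the data it was trained on.
        return "underfitting"
    if val_error - train_error > max_gap:
        # Low training error, but much worse on data it has not seen.
        return "overfitting"
    return "reasonable fit"


print(diagnose_fit(train_error=0.02, val_error=0.30))  # overfitting
print(diagnose_fit(train_error=0.35, val_error=0.37))  # underfitting
print(diagnose_fit(train_error=0.08, val_error=0.11))  # reasonable fit
```

A large gap between the two errors suggests the model has memorised its training data, while a high error on both suggests it is too simple for the problem.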

Algorithmic Causes

The limitations of current AI algorithms also contribute to hallucinations. Many AI models, especially deep learning models, are complex and operate as “black boxes”, making it difficult to understand how they arrive at specific outputs.

When these models encounter edge cases or outliers that fall outside their training data, their predictions can be wildly inaccurate. The inability to adequately handle such cases is a significant cause of AI hallucination.
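A simple guard against this is to check whether an input resembles the training data before trusting the prediction. The following is a minimal sketch, assuming numeric features and using a per-feature z-score test; the helper names and the threshold of 3 standard deviations are choices made for this example, and production systems often use more sophisticated out-of-distribution detectors.

```python
from statistics import mean, stdev


def fit_feature_stats(training_rows):
    """Return a (mean, standard deviation) pair for each feature column."""
    columns = list(zip(*training_rows))
    return [(mean(col), stdev(col)) for col in columns]


def is_out_of_distribution(row, stats, z_threshold=3.0):
    """Flag a row if any feature lies far outside the training range.

    z_threshold is an illustrative assumption, not a universal constant.
    """
    for value, (mu, sigma) in zip(row, stats):
        if sigma == 0:
            # Constant feature in training: any other value is unfamiliar.
            if value != mu:
                return True
            continue
        if abs(value - mu) / sigma > z_threshold:
            return True
    return False


training_rows = [(1.0, 10.0), (1.2, 11.0), (0.9, 9.5), (1.1, 10.5), (1.0, 10.2)]
stats = fit_feature_stats(training_rows)

print(is_out_of_distribution((1.05, 10.1), stats))  # False
print(is_out_of_distribution((5.0, 10.1), stats))   # True
```

Inputs flagged this way can be rejected, logged, or passed to a fallback instead of being answered with unwarranted confidence.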

Systematic Errors

Systematic errors, including inaccurate assumptions during model development and bugs in code implementation, can also cause or worsen AI hallucinations. The model’s outputs can be consistently incorrect if the foundational assumptions about data relationships or algorithmic behaviours are flawed. Coding errors, which are often subtle and challenging to detect, can also lead to unexpected and erroneous AI behaviour.

Impact on AI Applications

AI hallucinations can severely impact various AI applications. For instance, in autonomous vehicles, a hallucination could cause the vehicle to misinterpret road signs or obstacles, potentially leading to accidents. In medical diagnosis, AI hallucinations could result in incorrect diagnoses or treatment recommendations, jeopardising patient safety. Chatbots and virtual assistants might produce irrelevant or nonsensical responses, diminishing user experience and trust.

Notable incidents include self-driving cars misidentifying objects on the road and AI-powered diagnostic tools providing incorrect medical advice, highlighting the critical need to address AI hallucinations.

Trust and Reliability Issues

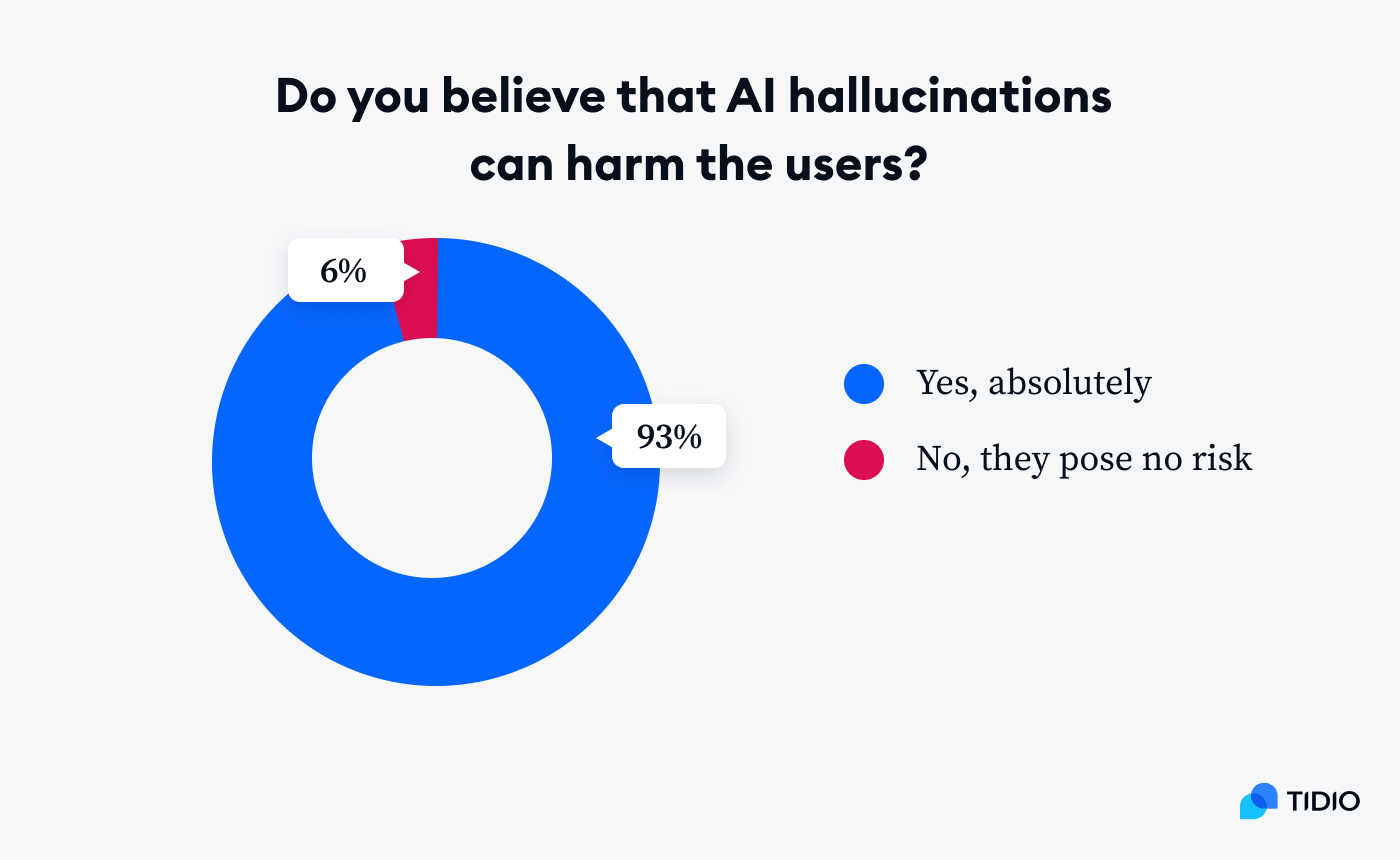

AI hallucinations undermine the trust and reliability of AI systems. Users expect AI to be accurate and dependable, but frequent or severe hallucinations erode confidence. This is particularly concerning in critical applications like healthcare and transportation, where incorrect AI outputs can have serious consequences. The potential harm and risks associated with AI hallucinations necessitate rigorous scrutiny and improvement of AI systems.

Ethical and Legal Concerns

Ethically, AI hallucinations raise questions about the responsibility and accountability of AI developers and operators. Incorrect or dangerous decisions made by AI can have significant repercussions, including financial losses, legal liabilities, and harm to individuals. Regulatory frameworks are increasingly focusing on ensuring that AI systems are transparent, reliable, and accountable, requiring developers to address and mitigate the risk of hallucinations.

Improving Data Quality

Ensuring diverse and representative training datasets is a fundamental step in reducing AI hallucinations. Data preprocessing techniques, such as data augmentation and cleaning, can help mitigate biases and make the training data more comprehensive, although they cannot guarantee full coverage. These strategies improve the model’s ability to generalise and handle a wide range of scenarios.
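To make cleaning and augmentation concrete, here is a small sketch for tabular data. It assumes each record is a `(features, label)` pair with numeric features; the function names, the noise scale, and the number of copies are all hypothetical choices for illustration. Real pipelines typically use domain-specific augmentation, such as image flips or text paraphrasing.

```python
import random


def clean_records(records):
    """Drop records with missing fields and exact duplicates, keeping order."""
    seen = set()
    cleaned = []
    for features, label in records:
        if label is None or any(v is None for v in features):
            continue  # incomplete record
        key = (tuple(features), label)
        if key in seen:
            continue  # exact duplicate
        seen.add(key)
        cleaned.append(key)
    return cleaned


def augment_with_noise(records, copies=2, scale=0.05, seed=0):
    """Add jittered copies of each record (assumed noise scale and count)."""
    rng = random.Random(seed)  # seeded so the result is reproducible
    augmented = list(records)
    for features, label in records:
        for _ in range(copies):
            noisy = tuple(v + rng.gauss(0.0, scale) for v in features)
            augmented.append((noisy, label))
    return augmented


raw = [
    ((1.0, 2.0), "cat"),
    ((1.0, 2.0), "cat"),   # duplicate
    ((3.0, None), "dog"),  # missing feature
    ((4.0, 5.0), None),    # missing label
    ((3.0, 4.0), "dog"),
]
cleaned = clean_records(raw)
augmented = augment_with_noise(cleaned)
print(len(raw), len(cleaned), len(augmented))  # 5 2 6
```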

Enhancing Algorithms

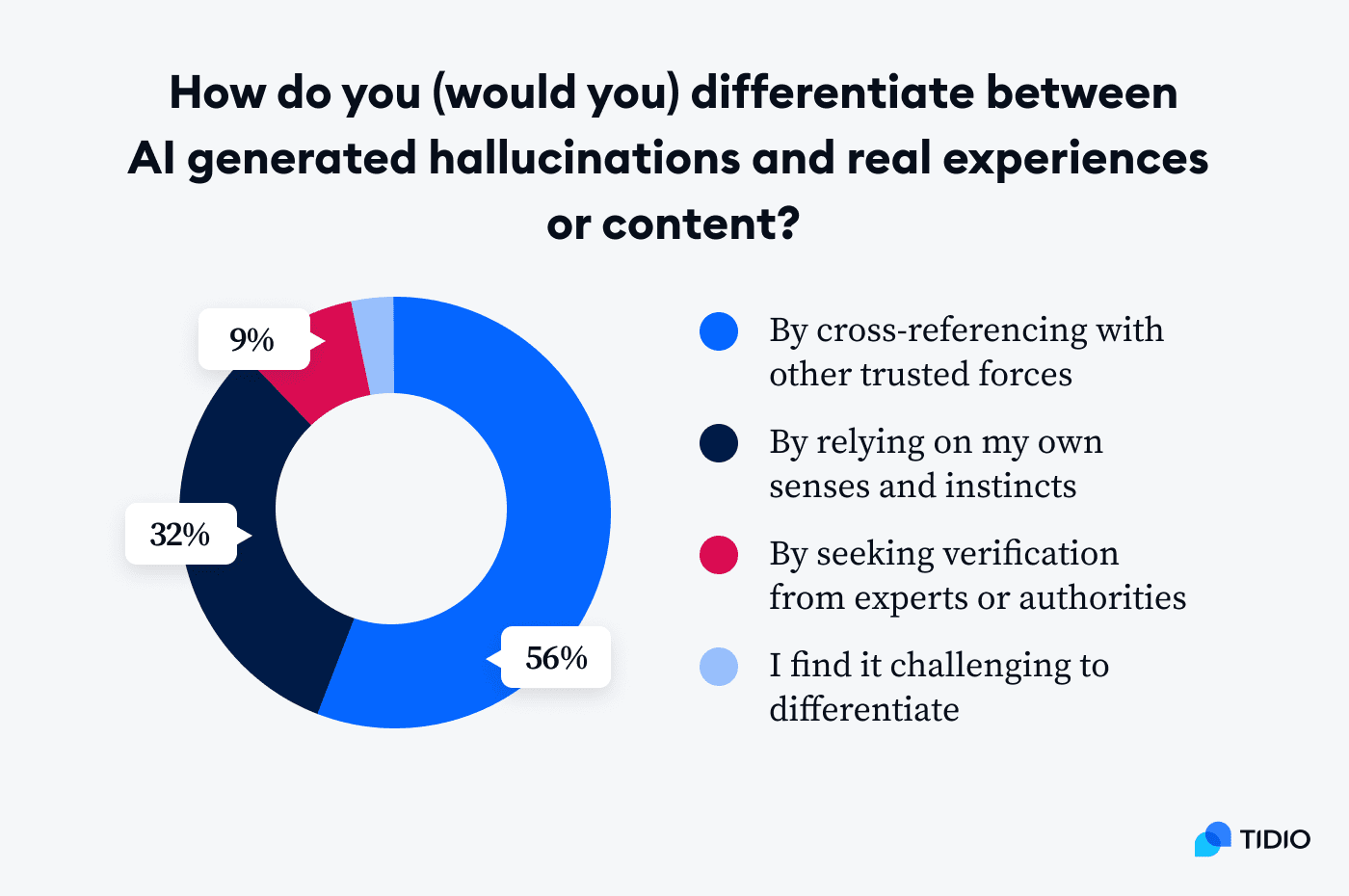

Developing more robust and resilient AI algorithms is crucial. Techniques like uncertainty estimation and confidence scoring can help AI systems gauge the reliability of their predictions and flag potentially hallucinated outputs. Incorporating these mechanisms into AI models can enhance their reliability and trustworthiness.
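As a rough illustration of confidence scoring, the sketch below turns a classifier's raw scores (logits) into probabilities with softmax, then flags predictions whose top probability is low. The function names and the 0.7 threshold are assumptions made for this example. Keep in mind that softmax probabilities are often poorly calibrated, so a high score is not proof that the output is correct.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def normalized_entropy(probs):
    """Entropy scaled to [0, 1]; values near 1 mean the model is unsure."""
    if len(probs) < 2:
        return 0.0
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return entropy / math.log(len(probs))


def score_prediction(logits, min_confidence=0.7):
    """Return the predicted class with a confidence score and a flag.

    min_confidence is an illustrative threshold, not a recommended value.
    """
    probs = softmax(logits)
    confidence = max(probs)
    return {
        "label_index": probs.index(confidence),
        "confidence": confidence,
        "uncertainty": normalized_entropy(probs),
        "flagged": confidence < min_confidence,
    }


print(score_prediction([4.0, 0.5, 0.1])["flagged"])  # False
print(score_prediction([1.0, 0.9, 1.1])["flagged"])  # True
```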

Systematic Approaches

Thorough testing and validation procedures are essential for detecting and addressing AI hallucinations. Continuous monitoring of AI systems in real-world applications allows for the identification of erroneous behaviours and enables timely updates and corrections. Implementing systematic and rigorous testing frameworks helps ensure that AI systems perform reliably under diverse conditions.
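Continuous monitoring can be as simple as tracking the error rate over the most recent predictions once ground truth becomes available. This is a minimal sketch: the class name `ErrorRateMonitor`, the window size, and the alert threshold are all hypothetical, and a real deployment would usually feed these numbers into its existing alerting tools.

```python
from collections import deque


class ErrorRateMonitor:
    """Track the error rate over a sliding window of recent predictions."""

    def __init__(self, window_size=100, alert_threshold=0.1):
        # A bounded deque silently drops the oldest outcome when full.
        self.outcomes = deque(maxlen=window_size)
        self.alert_threshold = alert_threshold

    def record(self, prediction, ground_truth):
        """Store True if the prediction was wrong, False otherwise."""
        self.outcomes.append(prediction != ground_truth)

    @property
    def error_rate(self):
        if not self.outcomes:
            return 0.0
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self):
        """Alert only once the window is full, to avoid noisy early alarms."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.error_rate > self.alert_threshold


monitor = ErrorRateMonitor(window_size=5, alert_threshold=0.2)
pairs = [("a", "a"), ("b", "b"), ("a", "b"), ("b", "a"), ("a", "a")]
for prediction, truth in pairs:
    monitor.record(prediction, truth)

print(monitor.error_rate, monitor.should_alert())  # 0.4 True
```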

Human-AI Collaboration

Leveraging human oversight is another effective strategy to mitigate AI hallucinations. Hybrid models that combine AI and human intelligence can catch and correct errors that the AI might miss. Human reviewers can provide critical feedback and interventions, enhancing the overall accuracy and reliability of AI systems.
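One simple way to set up this kind of collaboration is to accept confident predictions automatically and send the rest to a human review queue. The sketch below assumes each prediction already carries a confidence score; the `Prediction` class, the `route_predictions` function, and the 0.8 threshold are hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float


def route_predictions(predictions, review_threshold=0.8):
    """Split predictions into auto-accepted and human-review lists.

    review_threshold is an assumed cut-off; the right value depends on
    how costly an undetected error is in the application.
    """
    auto_accepted, needs_review = [], []
    for prediction in predictions:
        if prediction.confidence >= review_threshold:
            auto_accepted.append(prediction)
        else:
            needs_review.append(prediction)
    return auto_accepted, needs_review


predictions = [
    Prediction("scan-001", "benign", 0.97),
    Prediction("scan-002", "malignant", 0.55),
    Prediction("scan-003", "benign", 0.81),
]
auto_accepted, needs_review = route_predictions(predictions)
print([p.item_id for p in auto_accepted])  # ['scan-001', 'scan-003']
print([p.item_id for p in needs_review])   # ['scan-002']
```

In high-stakes settings such as healthcare, the threshold can be raised so that more cases reach a human reviewer, at the cost of more manual work.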

Final Takeaways

AI hallucination is a multifaceted issue with significant implications for the reliability and trustworthiness of AI systems. Understanding its causes, from data-related issues to algorithmic and systematic errors, is crucial for developing effective solutions. Improving data quality, enhancing algorithms, adopting systematic approaches, and fostering human-AI collaboration are key strategies to mitigate AI hallucinations.

As AI continues to evolve, addressing hallucination issues is imperative for ensuring its safe and effective deployment. Ongoing research and development in this area will help build more reliable and trustworthy AI systems, fostering greater confidence in their applications across various domains.